Short Summary

This post is for architects and DevOps engineers. It aims to:

- Explain why the correct setup of network tools matters in Kubernetes clusters and the Kubermatic Kubernetes Platform (KKP)

- Help avoid network bottlenecks in the IT infrastructure that slow down response times

Quick Network Response Times Have Become the Standard

33 milliseconds: the time it takes for a piece of information to travel from Spain to the U.S. and back via the Marea undersea cable. Technology like this enables high-speed, high-definition communication all over the world.

Quick response times have become a standard end-user expectation, so communication inside IT infrastructure must not become a bottleneck.

This has to be considered when designing IT infrastructure and optimizing operations. In practice, it means evaluating and choosing suitable networking tools for Kubernetes clusters and for the Kubermatic Kubernetes Platform. We’ve done a lot of benchmarking to see which setup performs best.

The Toolset for Network Benchmarking in Kubernetes

Baseline

Two things had to be decided before the actual benchmarking:

- Which tools and use cases we wanted to evaluate as benchmarking subjects

- Which tools we would use to perform the measurements

Applied measurements

First, we measured the time required to create pods in the Kubermatic Kubernetes Platform for various numbers of pods and nodes. This measurement is independent of the tools used for communication between clusters, but it is part of optimizing a cluster for better response times. We wanted to determine how pod startup time depends on the number of nodes and pods.
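As an illustration of how pod startup time can be derived, here is a minimal Python sketch. It assumes pod objects in the JSON format returned by `kubectl get pods -o json` and treats startup time as the interval between a pod's `metadata.creationTimestamp` and the `lastTransitionTime` of its `Ready` condition. That definition, and the function names, are our assumptions for illustration, not necessarily how the measurements in this post were taken. Kubernetes records these timestamps with one-second precision, so millisecond-level benchmarks need finer-grained timing than this.

```python
from datetime import datetime, timezone
from statistics import mean
from typing import Optional

# Kubernetes serializes these timestamps as RFC 3339 with one-second precision.
TS_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def parse_ts(value: str) -> datetime:
    """Parse a Kubernetes timestamp such as '2021-05-04T10:00:03Z' as UTC."""
    return datetime.strptime(value, TS_FORMAT).replace(tzinfo=timezone.utc)


def startup_latency_ms(pod: dict) -> Optional[float]:
    """Hypothetical helper: time from pod creation until Ready=True, in ms.

    Assumption: the Ready condition's lastTransitionTime marks startup. It
    reflects the *latest* transition, so this only approximates startup time
    for freshly created pods that have not flapped.
    """
    created = parse_ts(pod["metadata"]["creationTimestamp"])
    for cond in pod.get("status", {}).get("conditions", []):
        if cond["type"] == "Ready" and cond["status"] == "True":
            ready = parse_ts(cond["lastTransitionTime"])
            return (ready - created).total_seconds() * 1000
    return None  # the pod has not become Ready


def average_startup_ms(pod_list: dict) -> float:
    """Hypothetical helper: average startup latency of all Ready pods in a pod list."""
    latencies = [ms for ms in map(startup_latency_ms, pod_list["items"]) if ms is not None]
    return mean(latencies)
```

For example, after `kubectl get pods -o json > pods.json`, calling `average_startup_ms(json.load(open("pods.json")))` (with `import json`) averages over the pods in the current namespace. Repeating this for different pod and node counts gives the kind of comparison described above.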

Second, we measured communication inside a cluster to compare the network performance of two Container Network Interface (CNI) plugins, Cilium and Canal, in Kubermatic Kubernetes Platform user clusters.

Last but not least, for connections between the control plane and the user cluster, we compared the performance of OpenVPN and Konnectivity using API server-to-node communication benchmarks.

OpenVPN and Konnectivity are needed because of the unique architecture of the Kubermatic Kubernetes Platform (KKP): the Kubernetes control-plane components are detached from the workloads and run in a different cluster. Workloads run in user clusters, while control-plane components live in the master/seed cluster. This lets user clusters devote as many resources as needed to the actual workloads and makes it possible to create several efficient Kubernetes clusters that work together. OpenVPN or Konnectivity is responsible for the connection between the master/seed cluster and the user clusters, so this connection has to keep latency as low as possible.

Benchmarking tools

We used Kubernetes Netperf, which includes iperf, and added qperf support to it. Because these tools can’t benchmark Konnectivity, we also built Benchmate for those measurements.
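To illustrate the principle behind a request/response latency test, here is a minimal Python sketch of a TCP ping-pong measurement on a single host. It is not Netperf, iperf, qperf, or Benchmate, and it does not reproduce how those tools work internally: it simply sends a fixed-size message, waits for the echo, and records the round-trip time. The message size, iteration count, and function names are illustrative assumptions. It reports round-trip time, which may differ from how a given tool reports latency.

```python
import socket
import statistics
import threading
import time

MSG_SIZE = 64  # bytes per message; an arbitrary assumption (benchmarks vary this)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since recv() may return fewer."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def echo_server(listener: socket.socket, iterations: int, size: int) -> None:
    """Accept one client and echo back each message it sends."""
    conn, _ = listener.accept()
    with conn:
        conn.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(iterations):
            conn.sendall(recv_exact(conn, size))


def tcp_rtt_us(host: str = "127.0.0.1", iterations: int = 1000, size: int = MSG_SIZE) -> float:
    """Hypothetical helper: median TCP round-trip time in microseconds."""
    listener = socket.create_server((host, 0))  # port 0: pick a free port
    port = listener.getsockname()[1]
    server = threading.Thread(target=echo_server, args=(listener, iterations, size), daemon=True)
    server.start()
    payload = b"x" * size
    samples = []
    with socket.create_connection((host, port)) as client:
        # Disable Nagle's algorithm so small messages are sent immediately.
        client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(iterations):
            start = time.perf_counter()
            client.sendall(payload)
            recv_exact(client, size)
            samples.append((time.perf_counter() - start) * 1e6)
    server.join()
    listener.close()
    return statistics.median(samples)
```

On loopback this only measures the local TCP stack. In a real cluster benchmark, the echo side would run in a pod on another node (or behind the control-plane tunnel) so that the CNI or the OpenVPN/Konnectivity path is actually on the wire.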

Benchmarking of the Kubermatic Kubernetes Platform

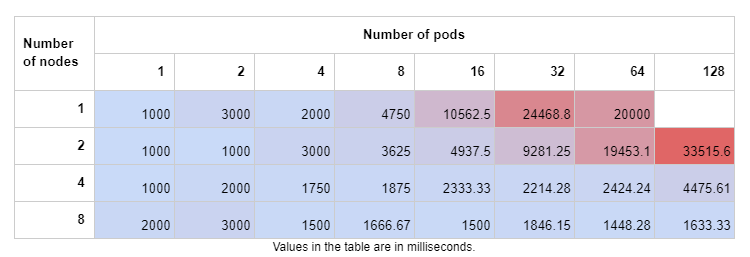

Kubernetes pod creation time

The table below shows the average time, in milliseconds, needed to get pods up and running. This benchmark ran on a cluster whose nodes had 2 CPUs and 2 GB of RAM.

As you can see, a higher ratio of pods to nodes results in longer pod startup times.

Kubernetes cluster communication performance between nodes and pods

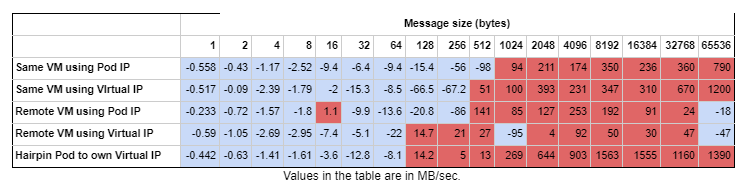

For the cluster communication benchmark, we measured throughput and latency in different setups. As mentioned earlier, this benchmark compares Cilium and Canal.

The table below shows the throughput differences between the two tools in various setups. Each value is Cilium’s message throughput minus Canal’s. A positive value (red cell) means Cilium had the higher throughput for that setup and message size; a negative value (blue cell) means Canal did. Higher throughput means better performance.

This measurement was taken with qperf on multiple Amazon EC2 t3a.xlarge virtual machines, each with 4 vCPUs and 16 GB of RAM. Throughput differences are given in MB/s.

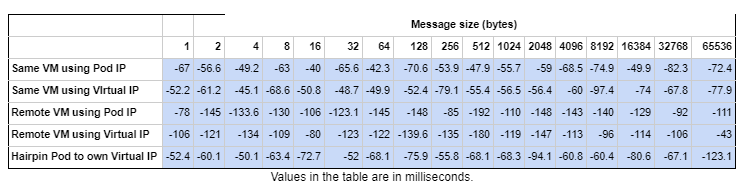

The next measurement shown below was also taken with qperf on Amazon EC2 t3a.xlarge machines with 4 vCPUs and 16 GB of RAM. The only difference is the unit: because the table contains latency differences, the values are in milliseconds.

The setups were the same, and again we subtracted Canal’s values from Cilium’s. A positive value (red cell) means Cilium had the higher latency for that setup and message size; a negative value (blue cell) means Canal had the higher latency. As you can see, Canal had higher latency in every case. Unlike throughput, higher latency means worse performance, so Cilium is the clear winner here.
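The sign convention used in both comparisons (Cilium minus Canal, where a positive throughput difference favors Cilium but a positive latency difference favors Canal) can be captured in a small Python helper. The `Result` type, its fields, and any numbers passed to it are hypothetical; they illustrate the arithmetic only and are not the measured results.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Result:
    """One benchmark cell (hypothetical structure)."""
    setup: str      # e.g. "pod-to-pod, different nodes" (illustrative label)
    msg_size: int   # message size in bytes
    cilium: float   # Cilium's value (MB/s for throughput, ms for latency)
    canal: float    # Canal's value in the same unit


def compare(results: List[Result], higher_is_better: bool = True) -> List[Tuple[str, int, float, str]]:
    """Return (setup, msg_size, cilium - canal, winner) for each cell.

    Use higher_is_better=True for throughput and False for latency.
    """
    rows = []
    for r in results:
        diff = r.cilium - r.canal
        if diff == 0:
            winner = "tie"
        elif (diff > 0) == higher_is_better:
            winner = "Cilium"  # positive and higher is better, or negative and lower is better
        else:
            winner = "Canal"
        rows.append((r.setup, r.msg_size, diff, winner))
    return rows
```

For latency, a negative difference (blue cell) therefore counts as a win for Cilium, which is the case described for every cell of the latency table.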

Kubernetes control-plane and cluster communication

The Kubermatic Kubernetes Platform has an architecture in which the control-plane components reside in a different cluster from the workloads. We call this the master cluster, while all workloads run in the so-called user clusters. That’s why control-plane-to-cluster communication has to be handled separately from communication inside the cluster.

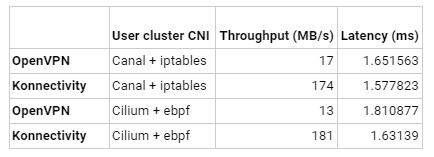

We benchmarked control-plane-to-cluster communication to estimate throughput and latency between the API server and the nodes. Both OpenVPN and Konnectivity were benchmarked, and the results can be seen in the table below.

Konnectivity had slightly better latency and much higher throughput than OpenVPN. Based on these two parameters, Konnectivity is clearly the better tool.

What Is the Optimal Kubermatic Setup?

First, let’s go back to pod creation time. This experiment showed that the more pods you want to start quickly, the more nodes you need in your cluster.

Cilium achieved slightly lower throughput than Canal with smaller messages, but came out ahead with larger message sizes.

Since Cilium had lower latency than Canal in every case, it can be the better choice, especially when larger messages need to be transported.

Finally, Konnectivity outperformed OpenVPN for control-plane-to-cluster communication, so using Konnectivity together with Cilium in your cluster is a great combination. Keep in mind, however, that when choosing tools for your cluster, parameters other than the ones we measured may also be relevant in your environment.

Contact us, and we’ll help you create high-performance Kubernetes clusters for your enterprise with the Kubermatic Kubernetes Platform.

Learn More

- Video: See Cilium CNI and other cool features in action

- Get Started With KKP Easily: start.kubernetes

- Check out KKP’s huge Technology Integrations