With Kubermatic Kubernetes Platform (KKP) 2.19, we introduced support for the Cilium CNI in both the Enterprise and the Community Edition of our platform.

Why? Because we think the Cilium folks are currently building the best open source networking platform for Kubernetes. Cilium provides, secures and observes network connectivity between container workloads in a cloud native way, fueled by the revolutionary kernel technology eBPF. When we tested the performance of Canal vs. Cilium, Cilium performed up to 30% better than Canal in ordinary Kubernetes clusters running on the same hardware. Naturally, we wanted to make this easily available to our users.

In this blog post, I’ll show you how Cilium and KKP users can benefit from this integration, before diving deep into how to deploy a KKP cluster with Cilium CNI and exploring its traffic.

Benefits of Cilium CNI for KKP Users

Cilium is a CNCF incubating project that provides, secures and observes network connectivity between container workloads in a truly cloud native way. At the foundation of Cilium is a new Linux kernel technology called eBPF, which enables the dynamic insertion of powerful security, visibility, and networking control logic into the Linux kernel.

The main benefits of the Cilium CNI for KKP users include:

Transparent Observability

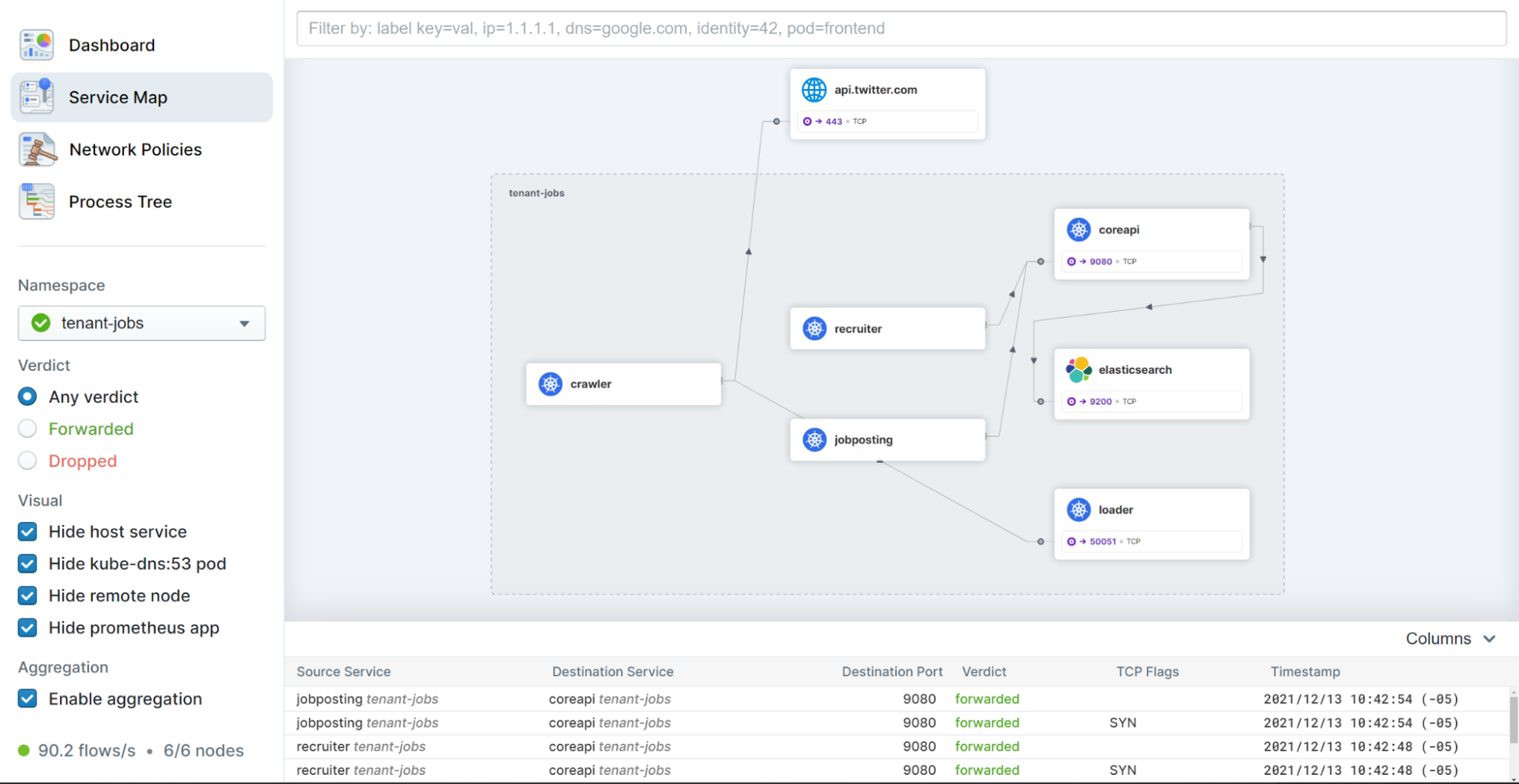

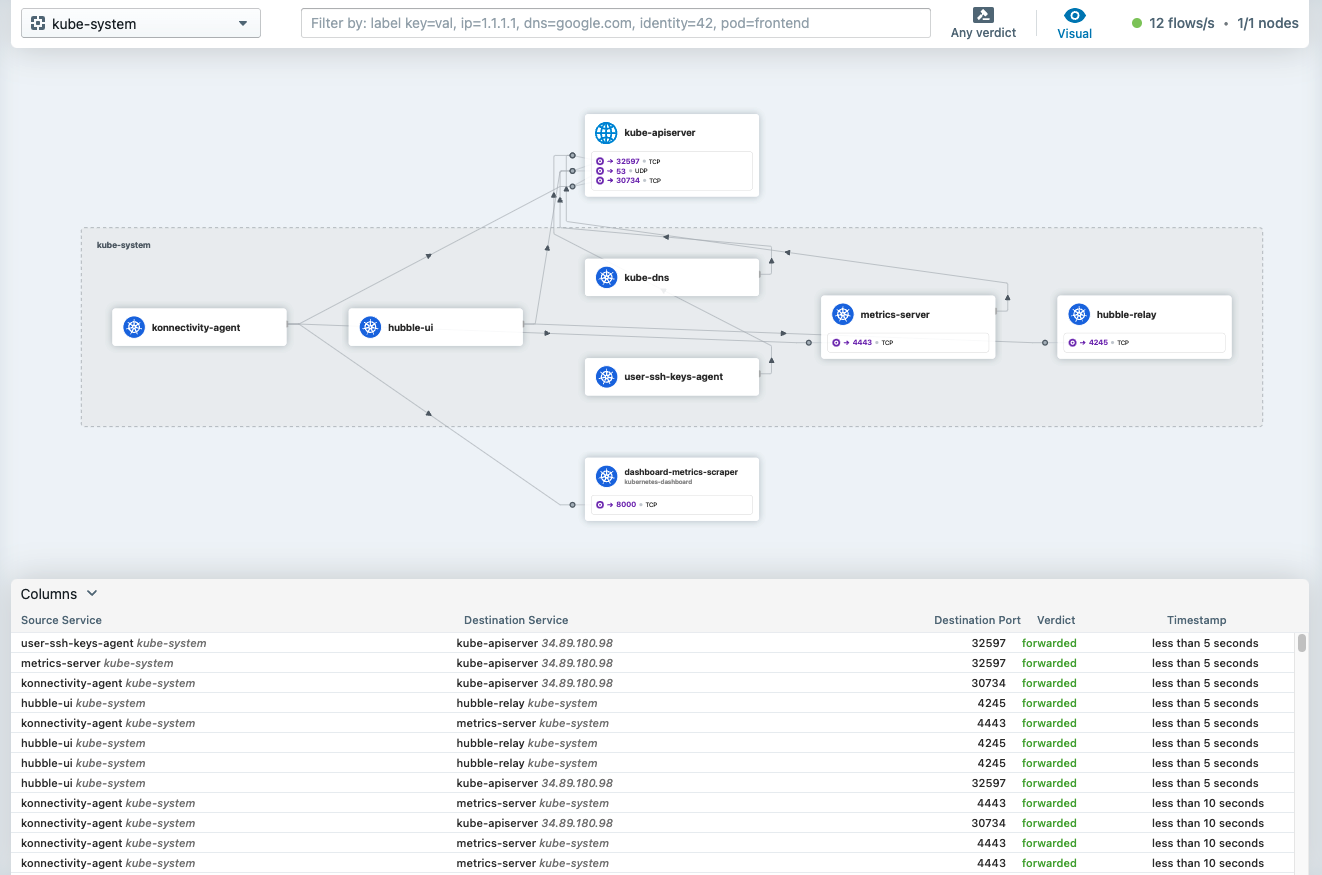

As part of the Cilium integration in KKP, users can easily enable the Hubble add-on in their clusters. Hubble is an observability platform built on top of Cilium and eBPF to enable deep visibility into the communication and behavior of services in a completely transparent manner. By relying on eBPF, all visibility is programmable and allows for a dynamic approach that minimizes overhead while providing deep and detailed insight as required by users.

Hubble comes with a web user interface that can be used to investigate networking issues much faster and more conveniently than, for example, running tcpdump traces manually at various places in the cluster.

Even if the Hubble UI is not installed, the Hubble backend is enabled in KKP clusters by default. The data it collects can be used to trace traffic via the powerful Hubble CLI. For example, to observe all recent communication of a pod, you just need to type hubble observe --pod <pod-name>.
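To make the --pod filter a bit more concrete, here is a minimal Go sketch of what such a filter does conceptually. It keeps every flow in which the given pod is either the source or the destination. The Flow type, matchesPod and filterByPod are hypothetical simplifications, not Hubble's actual API. The [namespace/]name-prefix matching reflects our understanding of the CLI filter, so check hubble observe --help for the exact semantics in your version.

```go
package main

import (
	"fmt"
	"strings"
)

// Flow is a hypothetical, heavily simplified flow record. Real Hubble flows
// carry many more fields (identities, L4/L7 details, trace points, ...).
type Flow struct {
	SrcNamespace, SrcPod string
	DstNamespace, DstPod string
	Verdict              string
}

// matchesPod reports whether ns/pod matches a filter of the form
// "[namespace/]pod-name-prefix" (an assumed, simplified semantics).
func matchesPod(filter, ns, pod string) bool {
	if pod == "" {
		return false // endpoint is not a pod (e.g. a host or world IP)
	}
	wantNS, prefix := "", filter
	if i := strings.Index(filter, "/"); i >= 0 {
		wantNS, prefix = filter[:i], filter[i+1:]
	}
	if wantNS != "" && wantNS != ns {
		return false
	}
	return strings.HasPrefix(pod, prefix)
}

// filterByPod keeps the flows in which the pod is either source or destination.
func filterByPod(flows []Flow, filter string) []Flow {
	var out []Flow
	for _, f := range flows {
		if matchesPod(filter, f.SrcNamespace, f.SrcPod) ||
			matchesPod(filter, f.DstNamespace, f.DstPod) {
			out = append(out, f)
		}
	}
	return out
}

func main() {
	// Hypothetical sample records for illustration only (not real Hubble output).
	flows := []Flow{
		{"default", "client", "default", "server", "FORWARDED"},
		{"default", "client", "kube-system", "coredns-767874cf84-9grjx", "FORWARDED"},
		{"default", "other", "default", "server", "DROPPED"},
	}
	for _, f := range filterByPod(flows, "default/client") {
		fmt.Printf("%s/%s -> %s/%s %s\n", f.SrcNamespace, f.SrcPod, f.DstNamespace, f.DstPod, f.Verdict)
	}
}
```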

Service Load-Balancing Without Kube-Proxy

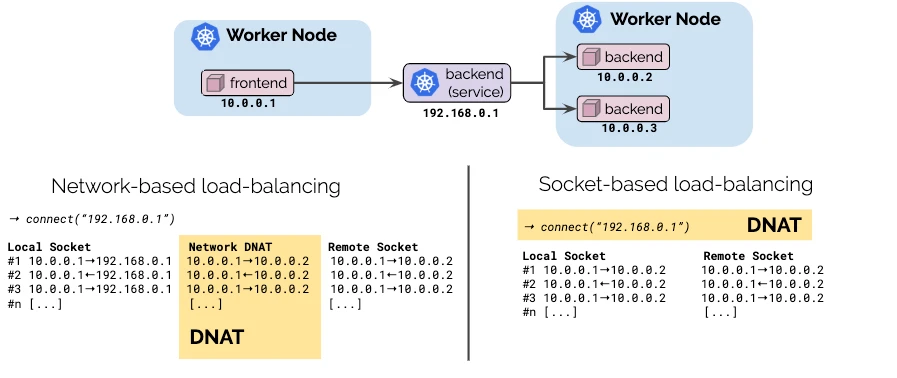

Service load-balancing in Kubernetes clusters is based on destination network address translation (NAT) of virtual service IP addresses to actual endpoint pod IP addresses. In traditional Kubernetes clusters, this is done by the kube-proxy component of Kubernetes. kube-proxy runs on each worker node and configures NAT on the node via either iptables or IPVS. This way of translating packet addresses can be quite resource intensive, especially at larger scale, which may hurt network throughput and latency.

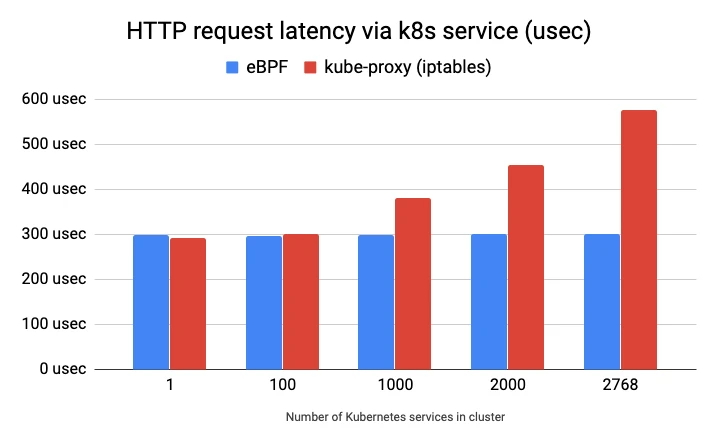

Cilium provides an eBPF-based alternative to the iptables and IPVS mechanisms implemented by kube-proxy, promising to reduce CPU utilization and latency, improve throughput and increase scale.

As shown in the graph below, with the eBPF kube-proxy replacement the HTTP request latency stays almost constant as the number of Kubernetes services in the cluster increases. That is not the case for the iptables kube-proxy mode, which has scaling issues:

(Source: https://cilium.io/blog/2019/08/20/cilium-16)
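Why does iptables mode scale worse? Here is a simplified mental model. For the first packet of a connection, the iptables service rules are evaluated one after another until the matching rule is found, so the cost grows with the number of services. eBPF-based load balancing can instead look the service up in a hash map. The Go sketch below is only a conceptual model of that difference, not kube-proxy's or Cilium's code. ServiceKey, rule, lookupLinear and lookupHash are hypothetical names.

```go
package main

import "fmt"

// ServiceKey identifies a service by virtual IP and port.
type ServiceKey struct {
	VIP  string
	Port uint16
}

// rule is a hypothetical stand-in for one iptables service rule:
// match on (VIP, port), then jump to a backend.
type rule struct {
	key     ServiceKey
	backend string
}

// lookupLinear mimics sequential rule evaluation: rules are checked one by
// one until a match, so the cost grows with the number of services.
// It returns the backend and how many rules were evaluated.
func lookupLinear(chain []rule, k ServiceKey) (string, int) {
	for i, r := range chain {
		if r.key == k {
			return r.backend, i + 1
		}
	}
	return "", len(chain)
}

// lookupHash mimics a hash-map lookup (the kind of structure eBPF maps
// provide): roughly constant cost regardless of the number of services.
func lookupHash(m map[ServiceKey]string, k ServiceKey) (string, bool) {
	b, ok := m[k]
	return b, ok
}

func main() {
	const n = 10000
	chain := make([]rule, 0, n)
	table := make(map[ServiceKey]string, n)
	for i := 0; i < n; i++ {
		k := ServiceKey{VIP: fmt.Sprintf("10.96.%d.%d", i/256, i%256), Port: 80}
		b := fmt.Sprintf("172.25.%d.%d:8080", i/256, i%256)
		chain = append(chain, rule{k, b})
		table[k] = b
	}
	// Worst case for the linear chain: the service whose rule comes last.
	last := ServiceKey{VIP: fmt.Sprintf("10.96.%d.%d", (n-1)/256, (n-1)%256), Port: 80}
	b1, steps := lookupLinear(chain, last)
	b2, _ := lookupHash(table, last)
	fmt.Println(b1, "after", steps, "rule checks; map lookup:", b2)
}
```

In the real kernel, conntrack caches the NAT decision after the first packet of a connection. The linear cost therefore mainly affects connection setup, which is what the latency graph measures.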

With Cilium's eBPF kube-proxy replacement, destination NAT happens at the socket level, before the kernel even builds the network packet. The first packet to leave the client pod already carries the right destination IP and port, and no further NAT happens on its way to the destination pod. This also means that kube-proxy does not need to run on the worker nodes at all.

(Source: https://cilium.io/blog/2019/08/20/cilium-16)
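The difference between per-packet NAT and socket-level translation can be modeled in a few lines of Go. This is a conceptual sketch under simplifying assumptions: one backend per service, and a map lookup standing in for the NAT step. It is not how the kernel or Cilium implements it. The per-packet variant rewrites every packet. The socket-level variant resolves the service address once, when the connection is opened, and builds every packet with the backend address from the start. All names and addresses are hypothetical.

```go
package main

import "fmt"

// Addr is an IP/port pair.
type Addr struct {
	IP   string
	Port uint16
}

// Packet carries only the field relevant here: its destination.
type Packet struct {
	Dst Addr
}

// services maps a (hypothetical) service address to a single backend.
var services = map[Addr]Addr{
	{"10.96.0.50", 80}: {"172.25.1.17", 8080},
}

// perPacketNAT models packet-level DNAT: each packet is built with the
// service address and then rewritten. The map lookup stands in for the
// per-packet conntrack/NAT step.
func perPacketNAT(n int) (pkts []Packet, translations int) {
	svc := Addr{"10.96.0.50", 80}
	for i := 0; i < n; i++ {
		p := Packet{Dst: svc}
		if be, ok := services[p.Dst]; ok {
			p.Dst = be
			translations++
		}
		pkts = append(pkts, p)
	}
	return pkts, translations
}

// socketLB models socket-level translation: the service address is
// resolved once, at connect() time, and every packet of the connection
// is built with the backend address from the start.
func socketLB(n int) (pkts []Packet, translations int) {
	dst := Addr{"10.96.0.50", 80}
	if be, ok := services[dst]; ok {
		dst = be
		translations++
	}
	for i := 0; i < n; i++ {
		pkts = append(pkts, Packet{Dst: dst})
	}
	return pkts, translations
}

func main() {
	_, a := perPacketNAT(1000)
	_, b := socketLB(1000)
	fmt.Println("per-packet translations:", a, "socket-level translations:", b)
}
```

Both variants deliver identical packets to the backend. The difference is only where, and how often, the translation work is done.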

Advanced Security

Apart from standard Kubernetes network policies, which work on layers 3 and 4, Cilium also uses its identity-aware and application-aware visibility to enable both DNS-based policies (e.g. allow/deny access to *.google.com) and application-based policies (e.g. allow/deny HTTP GET /foo). With the recently released Tetragon project, security observability also extends to monitoring and detecting process and syscall behavior.
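In a CiliumNetworkPolicy, DNS names are matched with toFQDNs entries such as matchPattern. L7 filtering uses toPorts rules with an http method and path. To illustrate what such matches mean, here is a simplified Go model of the decision logic. It assumes that * in a DNS pattern matches characters within a single DNS label. It also assumes that the HTTP method and path are treated as anchored regular expressions. Treat both as assumptions and consult the Cilium policy documentation for the authoritative semantics. The function and type names are hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// fqdnPatternToRegexp converts a pattern such as "*.google.com" into an
// anchored regular expression. In this simplified model '*' matches any
// run of DNS label characters but never a dot, so "*.google.com" matches
// "www.google.com" but neither "google.com" nor "a.b.google.com".
func fqdnPatternToRegexp(pattern string) *regexp.Regexp {
	parts := strings.Split(strings.ToLower(pattern), "*")
	for i, p := range parts {
		parts[i] = regexp.QuoteMeta(p)
	}
	return regexp.MustCompile("^" + strings.Join(parts, "[-a-z0-9_]*") + "$")
}

// dnsAllowed reports whether a looked-up name matches any allowed pattern.
func dnsAllowed(patterns []string, name string) bool {
	name = strings.ToLower(strings.TrimSuffix(name, "."))
	for _, p := range patterns {
		if fqdnPatternToRegexp(p).MatchString(name) {
			return true
		}
	}
	return false
}

// HTTPRule is a hypothetical L7 rule; an empty field matches anything.
type HTTPRule struct {
	Method, Path string
}

// fullMatch requires expr to match the whole input; "" matches everything.
// regexp.MustCompile panics on an invalid expression.
func fullMatch(expr, s string) bool {
	return expr == "" || regexp.MustCompile("^(?:"+expr+")$").MatchString(s)
}

// httpAllowed reports whether a request matches at least one rule.
func httpAllowed(rules []HTTPRule, method, path string) bool {
	for _, r := range rules {
		if fullMatch(r.Method, method) && fullMatch(r.Path, path) {
			return true
		}
	}
	return false
}

func main() {
	fmt.Println(dnsAllowed([]string{"*.google.com"}, "www.google.com"))
	fmt.Println(dnsAllowed([]string{"*.google.com"}, "example.org"))
	rules := []HTTPRule{{Method: "GET", Path: "/foo"}}
	fmt.Println(httpAllowed(rules, "GET", "/foo"), httpAllowed(rules, "POST", "/foo"))
}
```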

Advanced Networking Features

Beyond the benefits described above, eBPF allows Cilium to implement many more advanced networking features that could help shape the future evolution of KKP networking. The KKP team is actively evaluating them for integration in future KKP releases. The features that caught our interest include:

- IPv6 / Dual-Stack support

- Native support for LoadBalancer services with BGP

- Sidecarless eBPF-powered Service Mesh

- Multi-cluster connectivity with Cilium Cluster Mesh

- Transparent Encryption

Great Community Support

Last but not least, a very important benefit of Cilium CNI is its wide adoption across the cloud native community. Cilium is a CNCF incubating project with hundreds of contributors from many different organizations, and many major public cloud providers have chosen it as their CNI.

This means you will not be left alone with your issues, and bugs tend to get fixed promptly.

Benefits for Cilium Users

With its integration into Kubermatic Kubernetes Platform, Cilium can easily be deployed on any KKP-supported infrastructure provider, whether in the cloud, on-premises or at the edge. The supported infrastructure providers include:

- AWS

- Google Cloud

- Azure

- OpenStack

- VMware vSphere

- Open Telekom Cloud

- DigitalOcean

- Hetzner

- Alibaba Cloud

- Equinix Metal

- KubeVirt

- Kubeadm

Since Kubermatic Kubernetes Platform also manages the necessary infrastructure setup, starting a new Kubernetes cluster with Cilium CNI on any of the above-mentioned providers takes just a few minutes. Even in hybrid cloud scenarios with clusters scattered across different providers, the whole setup can be managed from a single self-service web portal, and the end user experience is always the same.

By leveraging Cluster API and the Kubermatic machine-controller, the whole lifecycle of the worker nodes on any cloud provider can be managed declaratively via Kubernetes Custom Resources, which helps to avoid vendor lock-in.
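Declarative management boils down to a reconcile loop. You declare the desired state in a Custom Resource (for worker nodes, a MachineDeployment with a replica count), and a controller keeps converging the actual machines toward it. The following Go sketch shows only the core comparison step. MachineDeploymentSpec and reconcile are hypothetical, trimmed-down stand-ins. The real controllers additionally handle rolling updates, health checks and cloud provider API calls.

```go
package main

import (
	"fmt"
	"sort"
)

// MachineDeploymentSpec is a hypothetical, trimmed-down desired state:
// how many worker machines should exist.
type MachineDeploymentSpec struct {
	Name     string
	Replicas int
}

// reconcile compares the desired replica count with the machines that
// currently exist and returns what a controller would do: how many
// machines to create, and which ones to remove.
func reconcile(spec MachineDeploymentSpec, existing []string) (create int, remove []string) {
	if spec.Replicas < 0 {
		spec.Replicas = 0
	}
	if diff := spec.Replicas - len(existing); diff > 0 {
		return diff, nil
	}
	sorted := append([]string(nil), existing...)
	sort.Strings(sorted)
	// Scale down by removing the surplus (here simply the last machines in
	// name order; real controllers use smarter deletion policies).
	return 0, sorted[spec.Replicas:]
}

func main() {
	spec := MachineDeploymentSpec{Name: "workers", Replicas: 3}
	create, remove := reconcile(spec, []string{"workers-a"})
	fmt.Println(spec.Name, "create:", create, "remove:", remove)

	spec.Replicas = 1
	create, remove = reconcile(spec, []string{"workers-c", "workers-a", "workers-b"})
	fmt.Println(spec.Name, "create:", create, "remove:", remove)
}
```

The controller runs this comparison repeatedly. Editing the replica count in the resource is therefore all a user needs to do to scale.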

Lab: Deploying a KKP Cluster With Cilium CNI and Exploring the Traffic

Now let’s check out how this looks in real life: let’s deploy a new KKP cluster with Cilium as the CNI!

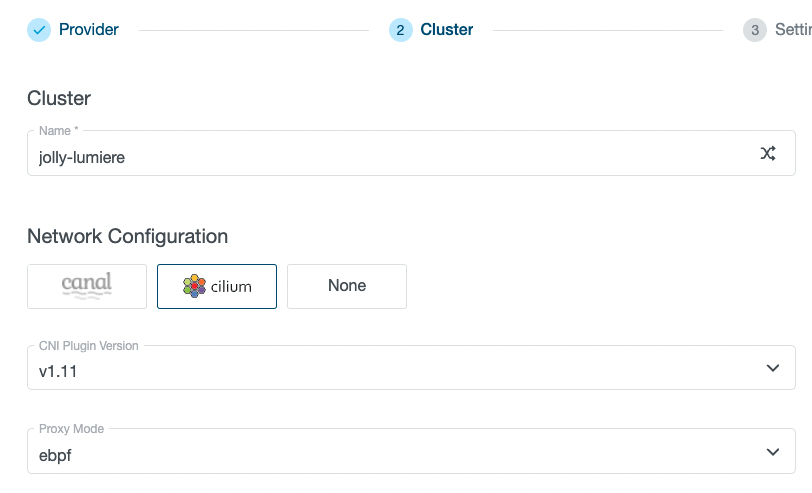

When creating a new cluster in the KKP wizard, Cilium CNI and eBPF proxy mode can be selected on the Cluster Details page:

A few minutes later, once the cluster is deployed, you can list the running pods in the kube-system namespace. Since we selected the eBPF proxy mode, kube-proxy is not deployed: service load-balancing is performed by Cilium instead.

$ kubectl get pods -n kube-system
NAME                                 READY   STATUS    RESTARTS   AGE
cilium-nh9l4                         1/1     Running   0          2m51s
cilium-operator-5757cd4d9f-mtsqp     1/1     Running   0          5m39s
coredns-767874cf84-9grjx             1/1     Running   0          5m39s
coredns-767874cf84-xpwl5             1/1     Running   0          5m39s
konnectivity-agent-69df85675-mft5d   1/1     Running   0          5m39s
konnectivity-agent-69df85675-rhzjg   1/1     Running   0          5m39s
metrics-server-7c94595b7c-7qq7s      1/1     Running   0          5m39s
metrics-server-7c94595b7c-lkkwg      1/1     Running   0          5m39s
node-local-dns-b4k9g                 1/1     Running   0          2m51s
user-ssh-keys-agent-bwgkg            1/1     Running   0          2m51s
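If you want to script this check instead of eyeballing the list, a minimal Go sketch can parse the table printed by kubectl get pods and look for pod names starting with kube-proxy. The helper names podNames and hasKubeProxy are hypothetical. The prefix check assumes the usual naming of kube-proxy DaemonSet pods.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// podNames extracts the NAME column from `kubectl get pods` table output,
// skipping the header line and empty lines.
func podNames(output string) []string {
	var names []string
	sc := bufio.NewScanner(strings.NewReader(output))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) == 0 || fields[0] == "NAME" {
			continue
		}
		names = append(names, fields[0])
	}
	return names
}

// hasKubeProxy reports whether any pod name looks like a kube-proxy pod.
func hasKubeProxy(names []string) bool {
	for _, n := range names {
		if strings.HasPrefix(n, "kube-proxy") {
			return true
		}
	}
	return false
}

func main() {
	// An excerpt of the output shown above.
	out := `NAME                               READY   STATUS    RESTARTS   AGE
cilium-nh9l4                       1/1     Running   0          2m51s
cilium-operator-5757cd4d9f-mtsqp   1/1     Running   0          5m39s
coredns-767874cf84-9grjx           1/1     Running   0          5m39s`
	fmt.Println("kube-proxy present:", hasKubeProxy(podNames(out)))
}
```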

Note that no Kubernetes control plane components, such as the API server or etcd, are visible in the cluster. They run in the Seed cluster of the Kubermatic platform and are not visible to the cluster's end users. konnectivity-agent is the component that connects the worker nodes to the remote Kubernetes control plane running in the Seed.

Next, we can enable the Hubble addon for network observability. To do that via the KKP web user interface, select the Addons tab on the Cluster Details page, click on the “Install Addon” button and select “hubble”:

A few moments later, you can see that the Hubble components are running in the cluster as well:

$ kubectl get pods -n kube-system
NAME                                     READY   STATUS      RESTARTS   AGE
cilium-nh9l4                             1/1     Running     0          4m3s
cilium-operator-5757cd4d9f-mtsqp         1/1     Running     0          6m51s
coredns-767874cf84-9grjx                 1/1     Running     0          6m51s
coredns-767874cf84-xpwl5                 1/1     Running     0          6m51s
hubble-generate-certs-4a617efdba-spd4n   0/1     Completed   0          44s
hubble-relay-56d45f97f8-rcc4s            1/1     Running     0          44s
hubble-ui-54fb86b9f4-6gtk7               3/3     Running     0          44s
konnectivity-agent-69df85675-mft5d       1/1     Running     0          6m51s
konnectivity-agent-69df85675-rhzjg       1/1     Running     0          6m51s
metrics-server-7c94595b7c-7qq7s          1/1     Running     0          6m51s
metrics-server-7c94595b7c-lkkwg          1/1     Running     0          6m51s
node-local-dns-b4k9g                     1/1     Running     0          4m3s
user-ssh-keys-agent-bwgkg                1/1     Running     0          4m3s

You can now connect to the Hubble UI either with the cilium hubble ui CLI command or by port-forwarding to it manually, e.g. with kubectl port-forward -n kube-system svc/hubble-ui 12000:80, and then opening http://localhost:12000 in your web browser.
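To verify from a script that the port-forward works before opening the browser, a minimal Go check can issue an HTTP GET against the forwarded address. This assumes the Hubble UI answers GET / with a 2xx status. uiReachable is a hypothetical helper name.

```go
package main

import (
	"fmt"
	"net/http"
	"time"
)

// uiReachable sends a GET request to url and reports whether the server
// answered with a 2xx status. Errors (connection refused, timeout,
// malformed URL) are returned to the caller.
func uiReachable(url string) (bool, error) {
	client := &http.Client{Timeout: 5 * time.Second}
	resp, err := client.Get(url)
	if err != nil {
		return false, err
	}
	defer resp.Body.Close()
	return resp.StatusCode >= 200 && resp.StatusCode < 300, nil
}

func main() {
	// Address of the kubectl port-forward described above.
	ok, err := uiReachable("http://localhost:12000")
	if err != nil {
		fmt.Println("Hubble UI not reachable:", err)
		return
	}
	fmt.Println("Hubble UI reachable:", ok)
}
```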

Even without installing the Hubble addon in KKP, you can still exec into the Cilium pods running in the cluster and use the Hubble CLI to observe the traffic, e.g.:

$ kubectl exec -it cilium-nh9l4 -n kube-system -c cilium-agent -- hubble observe
May 24 15:12:35.302: 172.25.0.53:40086 <- kube-system/metrics-server-7c94595b7c-7qq7s:4443 to-stack FORWARDED (TCP Flags: SYN, ACK)
May 24 15:12:35.302: 172.25.0.53:40086 -> kube-system/metrics-server-7c94595b7c-7qq7s:4443 to-endpoint FORWARDED (TCP Flags: ACK)
May 24 15:12:35.303: 172.25.0.53:40086 -> kube-system/metrics-server-7c94595b7c-7qq7s:4443 to-endpoint FORWARDED (TCP Flags: ACK, PSH)
May 24 15:12:35.307: 172.25.0.53:41996 <- kube-system/metrics-server-7c94595b7c-lkkwg:4443 to-stack FORWARDED (TCP Flags: ACK, PSH)
May 24 15:12:35.310: 172.25.0.53:41996 -> kube-system/metrics-server-7c94595b7c-lkkwg:4443 to-endpoint FORWARDED (TCP Flags: ACK, FIN)
May 24 15:12:35.310: 172.25.0.53:41996 <- kube-system/metrics-server-7c94595b7c-lkkwg:4443 to-stack FORWARDED (TCP Flags: ACK, FIN)
May 24 15:12:35.310: 172.25.0.53:41996 -> kube-system/metrics-server-7c94595b7c-lkkwg:4443 to-endpoint FORWARDED (TCP Flags: ACK)
May 24 15:12:35.311: 172.25.0.53:40086 <- kube-system/metrics-server-7c94595b7c-7qq7s:4443 to-stack FORWARDED (TCP Flags: ACK, PSH)
May 24 15:12:35.313: 172.25.0.53:40086 -> kube-system/metrics-server-7c94595b7c-7qq7s:4443 to-endpoint FORWARDED (TCP Flags: ACK, FIN)
May 24 15:12:35.314: 172.25.0.53:40086 -> kube-system/metrics-server-7c94595b7c-7qq7s:4443 to-endpoint FORWARDED (TCP Flags: RST)
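For quick ad-hoc analysis, the compact lines shown above can be parsed with a regular expression. The Go sketch below assumes exactly the line layout from this sample: a timestamp, two endpoints joined by an arrow, the observation point, the verdict and optional TCP flags. It then reports which endpoint sent a TCP reset. That layout may differ between Hubble versions, and for scripting the CLI's machine-readable output formats are the more robust choice (see hubble observe --help). Flow, parseFlow and the helper methods are hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// Flow holds the fields of one compact `hubble observe` line, following
// the layout of the sample output above (an assumption, not a spec).
type Flow struct {
	Time, Left, Arrow, Right, Point, Verdict string
	TCPFlags                                 []string
}

var lineRE = regexp.MustCompile(
	`^(\w{3} +\d+ [\d:.]+): (\S+) (<-|->) (\S+) (\S+) (\S+)(?: \(TCP Flags: ([^)]*)\))?$`)

// parseFlow splits a line into its fields; ok is false if it does not match.
func parseFlow(line string) (f Flow, ok bool) {
	m := lineRE.FindStringSubmatch(strings.TrimSpace(line))
	if m == nil {
		return Flow{}, false
	}
	f = Flow{Time: m[1], Left: m[2], Arrow: m[3], Right: m[4], Point: m[5], Verdict: m[6]}
	if m[7] != "" {
		f.TCPFlags = strings.Split(m[7], ", ")
	}
	return f, true
}

// Sender returns the endpoint the packet came from, following the arrow.
func (f Flow) Sender() string {
	if f.Arrow == "<-" {
		return f.Right
	}
	return f.Left
}

// HasFlag reports whether the given TCP flag was set on the packet.
func (f Flow) HasFlag(flag string) bool {
	for _, x := range f.TCPFlags {
		if x == flag {
			return true
		}
	}
	return false
}

func main() {
	lines := []string{
		"May 24 15:12:35.302: 172.25.0.53:40086 <- kube-system/metrics-server-7c94595b7c-7qq7s:4443 to-stack FORWARDED (TCP Flags: SYN, ACK)",
		"May 24 15:12:35.314: 172.25.0.53:40086 -> kube-system/metrics-server-7c94595b7c-7qq7s:4443 to-endpoint FORWARDED (TCP Flags: RST)",
	}
	verdicts := map[string]int{}
	for _, l := range lines {
		f, ok := parseFlow(l)
		if !ok {
			continue
		}
		verdicts[f.Verdict]++
		if f.HasFlag("RST") {
			fmt.Println("reset sent by", f.Sender())
		}
	}
	fmt.Println(verdicts)
}
```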

Summary

By leveraging Cilium and Kubermatic Kubernetes Platform, users can benefit from advanced Kubernetes automation across any multi-cloud, on-prem and edge environment, backed and secured by best-in-class network connectivity. We hope you enjoy how easily the two integrate, and we always look forward to hearing your feedback and exchanging ideas via Slack or GitHub.

Learn More

- Check out the Cilium community page to learn more about eBPF-based networking

- Find Cilium on GitHub

- Get started with Kubermatic Kubernetes Platform Community Edition with our GitOps wizard